Search engines, social networks, cloud business platforms and even live television streaming all have one thing in common: the compute, storage and network fabric that makes them possible. This fabric is a hyperscale array of millions of servers and storage devices, clustered in remote locations, with the clusters interconnected through high-speed dark fiber. If data is the oil, this fabric is the offshore drilling platform; if data is the product, this fabric is the manufacturing plant!

Interestingly, manufacturing plants and offshore drilling platforms kept evolving over the last century or so, getting faster, better and smarter over time. Automation, interconnection and intelligence became the hallmarks of 21st-century industry, a trend aptly termed the fourth industrial revolution, or Industry 4.0. The compute fabric described above played an important role in the automation, interconnection and intelligence of modern industry. Now let's flip the narrative on its head: did industry play an important role in the development of the compute fabric? Cloud computing has been an enabler of Industry 4.0, but is the Industry 4.0 paradigm being applied to make the compute fabric itself faster, better and smarter? Is there a way to design, commission and operate hyperscale compute infrastructure at 2X or 5X the speed, and to run it at a higher operating efficiency?

Let’s examine the large and hyperscale data centers that run cloud, search, business platform and social networking engines. They primarily consist of the following:

Compute, Storage, Network (CSN) – Racks, servers, networking equipment, networked storage, electrical power, and sensing and control equipment, among many other devices that enable efficient, uninterrupted operations

Balance of Plant (BOP) – Civil structures that house the CSN components, HVAC, electrical grid and standby power distribution/generation components, security components and material handling equipment; basically everything outside of CSN

The process by which such hyperscale data centers come to life and are upgraded or refreshed is complex. It involves thousands of employees and contractors, material suppliers and EPC (engineer, procure, construct) companies, and it takes a few years from plan to commissioning. Some of the largest data centers cost a couple of billion dollars to stand up and a few hundred million dollars a year to operate.

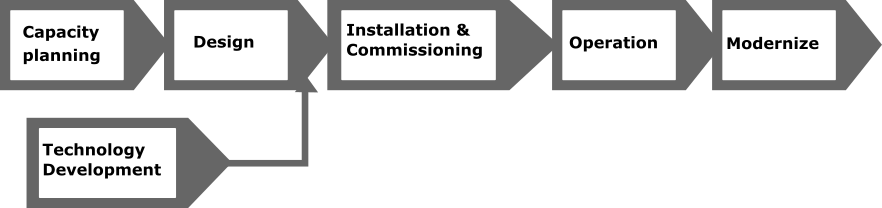

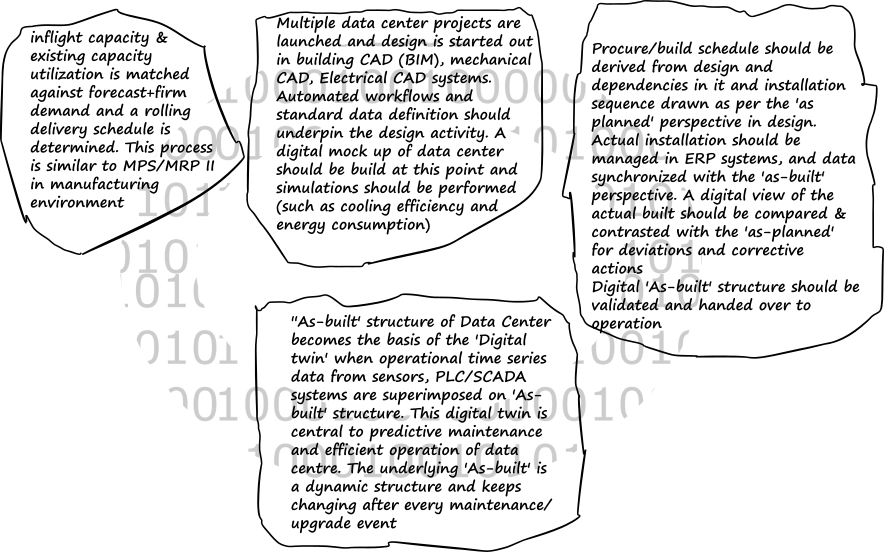

The process starts with aggregating firm and forecast demand for compute power at various levels, and arriving at an optimal, rolling capacity delivery schedule.

Next, a detailed design of the CSN and BOP components is undertaken (or design templates from a previous iteration are used as a starting point). Here we want to draw a distinction between the design of key compute components (such as ASICs customized for heavy machine learning workloads), which may be a parallel and ongoing R&D effort, and the design of the data center superstructure: the spatial arrangement of rows and columns of racks, cable routing, network capacity, site selection, civil structures and HVAC.

Construction of the data center facility, procurement or manufacture (make or buy) of the different components, and their installation according to the technical sequence or logistical decisions are as complex and dynamic as in any other large industrial complex, such as a manufacturing plant or an offshore oil production platform. At this stage, validation, certification and handover to operations take place as part of commissioning.

In the operate phase of the data center lifecycle, the focus is on maintaining operating efficiency from an energy consumption, availability, sustainability and cost perspective.

At some point, major refreshes or upgrades are undertaken, especially when the technology changes (for example, when a new ASIC with higher compute power in the same form factor comes along) or when new data centers are added within the same facility.

The digital thread is a process and technology transformation paradigm that aims for better integration and a seamless, lossless flow of information, enabling better and faster decision making at every stage of a product’s creation and consumption. A digital twin is a precise digital equivalent of a complex product: it allows for higher operating efficiency and the prediction of adverse events, and it informs the product design function of real-world operating behavior to guide the design process.

The data center lifecycle can be better managed, and hyperscalers can achieve the cherished goals of Industry 4.0, by applying the digital thread and twin paradigm. This paradigm has long been deployed in complex engineering industries such as aerospace and power generation.

We will not get into the detailed business processes, data design and technology applications that a digital thread and twin transformation entails, as that deserves a dedicated post of its own (which I intend to write soon). The proposed end state of the data center lifecycle after applying digital thread and twin principles is shown in the schematic below.

In conclusion, I believe that the digital thread and twin paradigm can be applied to the data center lifecycle just as it is being applied to manufacturing and industrial environments, to achieve higher efficiency and competitive advantage. We forecast a quantum jump in the compute capacity demanded of hyperscalers, driven by widespread cloud adoption, the injection of IoT devices into customer and enterprise workflows, autonomous vehicles and 5G edge applications. This will require hyperscalers to add compute power faster than ever and to achieve a sub-linear maintenance cost trend for the same or higher availability. Digital thread and twin can help hyperscalers achieve faster builds, better operating efficiency and the ability to support complex use cases and workloads.

Thank you for the fascinating concept. One can draw parallels with large capital assets like turbines and the DCS-controlled power plants that support them! Do you consider this scenario akin to “Assemble to Order”, where the constituent parts are procured and the owner-operator constructs, configures and assembles the product (the data center)?

The model also seems to be multidisciplinary (subsystems like HVAC, power, etc.), so it could be a great fit for Model-Based Systems Engineering as well, completing the analogy with power plants 🙂

Hello Sunil

Depending on the scale, it could range from assemble-to-order (ATO) to engineer-to-order (ETO). There has been a shift towards ETO as more and more hyperscalers move towards purpose-built compute cores. Model-based systems engineering: absolutely! Once the basic building blocks of lifecycle orchestration (PDM, PLM, DT) are in place, the need for models to drive the design of new data centers, and their use, will emerge. Thanks

BR

Awadhesh
